There’s a common simplification in AI discussions today: chain some API calls together, wrap them in a loop, and you’ve built an agent that can perform tasks autonomously.

Well, this usually works for demos and controlled environments, but it falls short when faced with actual enterprise systems.

Enterprise software — particularly CRM platforms — contains decades of accumulated business logic, complex data relationships, and domain-specific workflows that resist simple automation approaches. And until recently, we’ve lacked rigorous evaluation of how modern LLM agents actually perform in these environments.

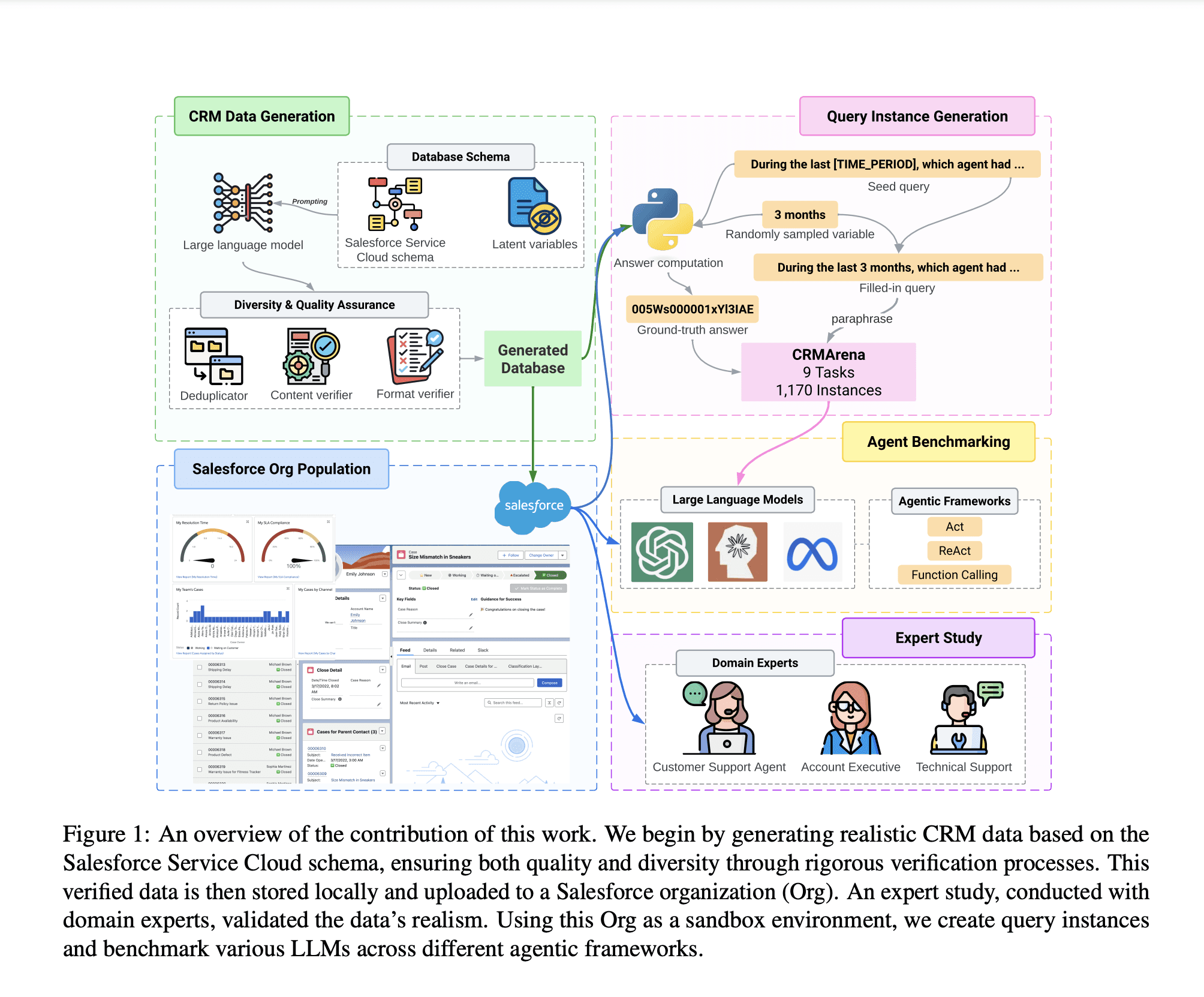

The recently published CRMArena benchmark by Salesforce AI Research changes this.

Many AI benchmarks focus on theoretical tasks, but the CRMArena paper shifts the spotlight to real-world CRM workflows, the kind that businesses actually depend on. It builds a simulated Salesforce Service Cloud environment with 16 interconnected business objects and evaluates leading LLM agents across nine common customer service tasks.

The study revealed that even state-of-the-art models like GPT-4o achieved success rates below 58% using ReAct prompting, and stayed below 65% even when equipped with manually crafted function-calling tools.

These results confirm what many of us working in enterprise software have observed firsthand: building effective AI agents for business systems requires more than API orchestration. It demands an understanding of complex data relationships, multi-step reasoning across business objects, and system-specific knowledge that current agent frameworks typically don’t address.

Current approaches to agent architecture

Most contemporary agent frameworks combine prompt engineering with API orchestration, using an LLM as the “brain” to decide which external functions to call and when. The architecture typically involves a planning phase where the model determines actions, followed by execution through API calls, and interpretation of results to inform the next decision.
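For readers who haven’t built one, here is a minimal sketch of that plan, act, observe loop in Python. A scripted function stands in for the LLM “brain”, and the names `AgentLoop`, `Step`, and the `lookup_case` tool are hypothetical, not taken from any particular framework.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Step:
    action: str    # name of a tool to call, or "finish"
    argument: str  # input for the tool, or the final answer when finishing


# The policy stands in for the LLM: it sees the history and picks the next step.
Policy = Callable[[list], Step]


@dataclass
class AgentLoop:
    tools: dict    # tool name -> callable(str) -> str (hypothetical API wrappers)
    policy: Policy
    max_steps: int = 5
    history: list = field(default_factory=list)

    def run(self) -> Optional[str]:
        for _ in range(self.max_steps):
            step = self.policy(self.history)  # plan: choose the next action
            if step.action == "finish":
                return step.argument
            observation = self.tools[step.action](step.argument)  # act: call the tool
            self.history.append((step, observation))  # observe: feed the result back
        return None  # step budget exhausted without an answer


# Usage with a scripted policy in place of a real model (hypothetical tool and case ID).
def scripted_policy(history):
    if not history:
        return Step("lookup_case", "00001")
    return Step("finish", history[-1][1])


agent = AgentLoop(
    tools={"lookup_case": lambda case_id: f"Case {case_id}: status=Closed"},
    policy=scripted_policy,
)
print(agent.run())  # Case 00001: status=Closed
```

Everything hard in an enterprise setting hides inside the policy and the tools; the loop itself is the easy part.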

This approach shows impressive results in controlled settings.

We’ve seen agents book flights, schedule appointments, perform web searches, and handle basic shopping tasks. These demos can make it seem like we’re close to having general-purpose AI assistants that can navigate any software system.

However, these demonstrations operate in constrained environments with clear objectives and limited complexity. Even seemingly advanced agents navigating websites or performing multi-step tasks are dealing with relatively structured data and predictable interactions.

But the limitations become apparent when we consider what these simplified environments lack. There’s rarely any need to understand complex database relationships, maintain context across dozens of interrelated objects, or interpret domain-specific business rules not explicitly stated in the interaction.

This creates a huge gap between the capabilities shown in demos and the requirements of enterprise systems.

A booking agent that can select flights through a standard API is fundamentally different from a CRM agent that needs to understand relationships between accounts, contacts, opportunities, and dozens of other objects while respecting business rules about data access, process flows, and compliance requirements.

The CRMArena benchmark offers the first rigorous evaluation of LLM agents in a realistic CRM environment.

Unlike previous benchmarks using simplified data models, CRMArena built a complete Salesforce environment modeled after real-world implementations.

The benchmark includes 16 business objects with an average of 1.31 dependencies per object — standard CRM entities like accounts, contacts, cases, and knowledge articles, all interconnected in ways that mirror actual enterprise deployments. This complexity significantly exceeds previous benchmarks (WorkArena had 7 objects with 0.86 dependencies, while Tau-Bench had just 3 objects with 0.67 dependencies).
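As a rough sanity check, 16 objects at 1.31 dependencies each works out to about 21 references in total (16 × 1.31 ≈ 21). The sketch below shows one way to compute such a metric, assuming “dependency” means an outgoing foreign-key reference; that reading, and the toy schema, are our own assumptions and not necessarily the paper’s exact counting rule.

```python
# Hypothetical toy schema (not CRMArena's): each object lists the objects it
# references through foreign keys.
schema = {
    "Account": [],
    "Contact": ["Account"],
    "Product": [],
    "Case": ["Account", "Contact", "Product"],
    "KnowledgeArticle": [],
}


def avg_dependencies(schema):
    """Average number of outgoing references per object."""
    total_refs = sum(len(refs) for refs in schema.values())
    return total_refs / len(schema)


def referencing(schema, target):
    """Objects that point at `target`, i.e. what a change to `target` can ripple into."""
    return sorted(obj for obj, refs in schema.items() if target in refs)


print(avg_dependencies(schema))        # 0.8
print(referencing(schema, "Account"))  # ['Case', 'Contact']
```

Even this five-object toy has a hub (`Account`) that two other objects depend on; a 16-object schema with a higher ratio gives an agent many more paths to keep track of.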

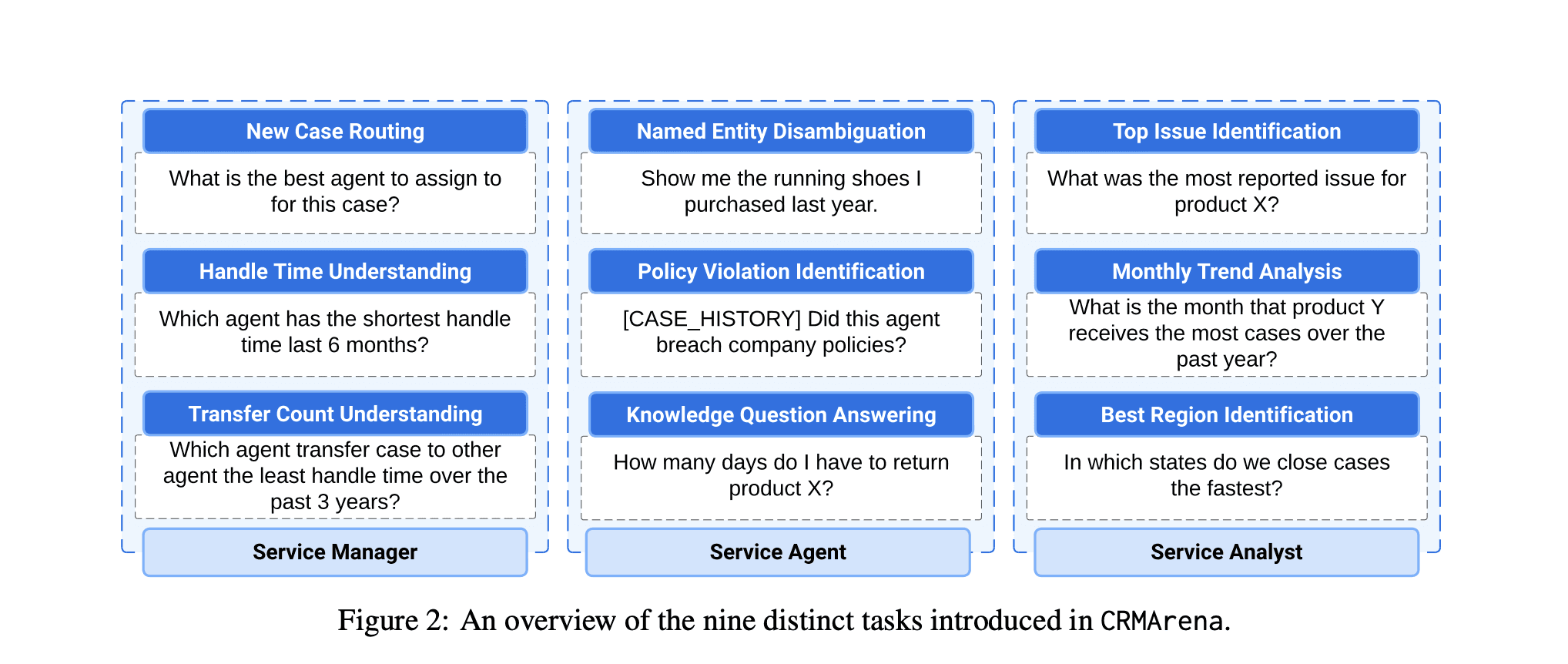

The researchers designed nine tasks across three professional personas:

- Service Manager tasks (analyzing agent performance, resource allocation)
- Service Agent tasks (customer inquiry resolution, policy checking)
- Service Analyst tasks (trend analysis, issue identification)

Domain experts validated the environment, with 90% rating it as “Realistic” or “Very Realistic” compared to actual Salesforce deployments they work with daily.

The performance results showed clear limitations:

- Using the ReAct framework, the best model (o1) achieved only 57.7% success across all tasks
- With manually crafted function-calling tools, performance improved to 64.3%
- Weaker LLMs often performed worse when equipped with function-calling capabilities
- Performance varied dramatically across task types, with some tasks seeing near-zero success rates

These findings show that even leading LLM agents struggle with tasks that CRM professionals handle routinely.

The gap is substantial enough to make current agent architectures impractical for unsupervised enterprise deployment.

To me, these results point to specific technical challenges that must be addressed to build effective enterprise agents:

Complex data interdependencies create navigation problems unlike anything in simplified environments.

When a case object connects to accounts, contacts, agents, knowledge articles, and products — each with their own relationships — the agent must maintain a mental model of this entire network to perform basic tasks.

For example, a question like “What was the most frequent issue with Flex Yoga Mat in Q2?” requires understanding relationships between products, issues, cases, and time periods. This demands sophisticated context management that maintains awareness of how different objects relate to each other during multi-step operations.
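Here is a small sketch of the joins that question implies, using in-memory records. The field names, IDs, issue labels, and the assumption that “Q2” means April to June 2024 are all hypothetical; CRMArena’s actual schema will differ.

```python
from collections import Counter
from datetime import date

# Hypothetical toy records; field names are illustrative, not CRMArena's schema.
products = {"P1": "Flex Yoga Mat", "P2": "Steel Water Bottle"}
issues = {"I1": "Tearing", "I2": "Slippery surface", "I3": "Late delivery"}
cases = [
    {"product": "P1", "issue": "I2", "created": date(2024, 4, 3)},
    {"product": "P1", "issue": "I2", "created": date(2024, 5, 19)},
    {"product": "P1", "issue": "I1", "created": date(2024, 6, 2)},
    {"product": "P1", "issue": "I1", "created": date(2024, 1, 15)},  # Q1: excluded
    {"product": "P2", "issue": "I3", "created": date(2024, 4, 10)},  # other product
]


def most_frequent_issue(product_name, start, end):
    # Step 1: resolve the product name to its ID(s) (product -> case link).
    product_ids = {pid for pid, name in products.items() if name == product_name}
    # Step 2: count issues on that product's cases inside the time window.
    counts = Counter(
        c["issue"]
        for c in cases
        if c["product"] in product_ids and start <= c["created"] <= end
    )
    if not counts:
        return None  # nothing matched: report that rather than guess
    # Step 3: take the most common issue ID and resolve it to a readable name.
    issue_id, _ = counts.most_common(1)[0]
    return issues[issue_id]


print(most_frequent_issue("Flex Yoga Mat", date(2024, 4, 1), date(2024, 6, 30)))
# Slippery surface
```

Each step crosses an object boundary (product, case, issue) and one implicit parameter (the date range behind “Q2”), which is exactly where an agent can lose track.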

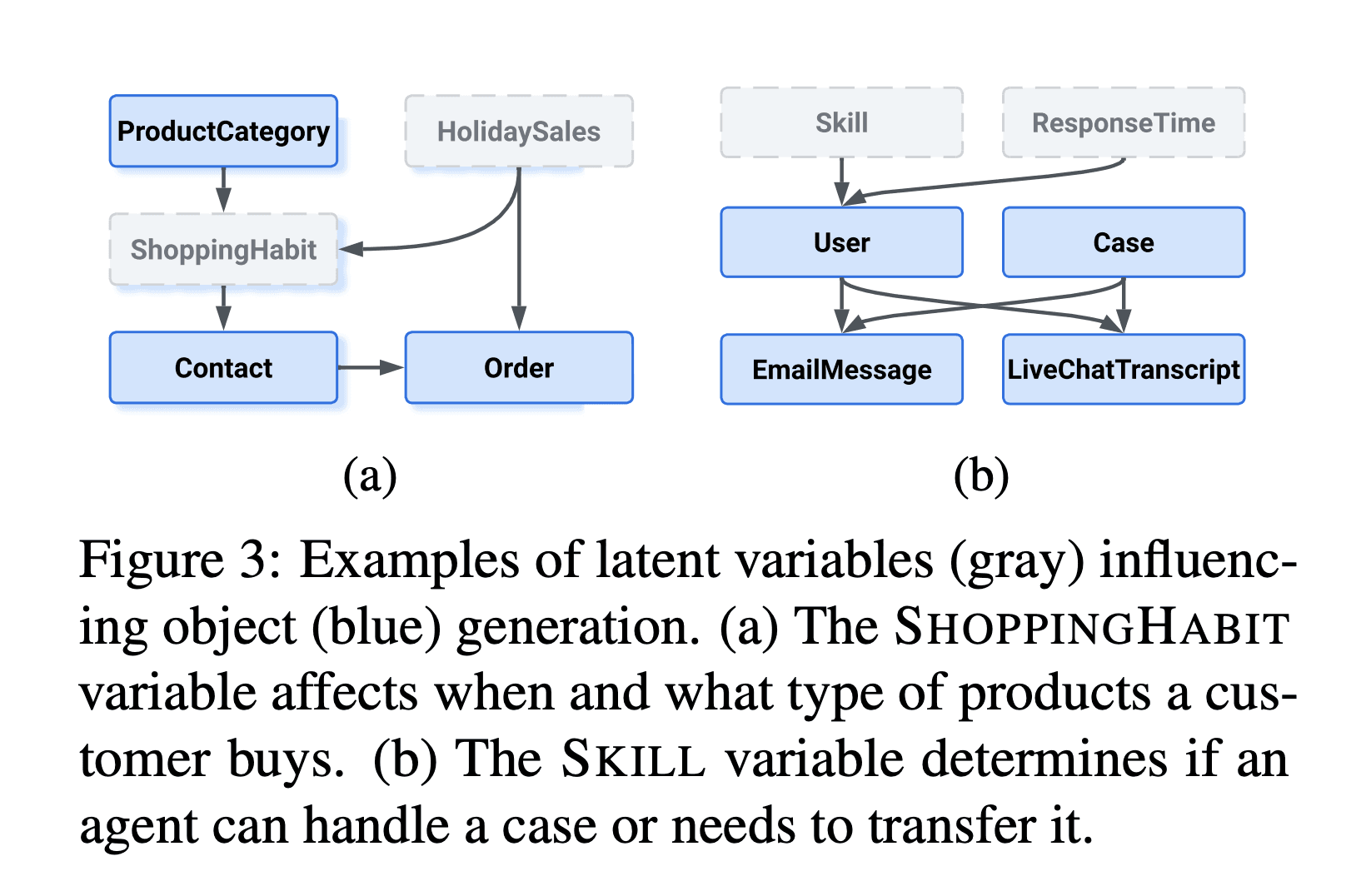

Hidden causal relationships exist in real business data as implicit patterns not formally defined in database schemas.

CRMArena modeled these through latent variables like SHOPPINGHABIT and SKILL, which influence customer purchasing patterns and agent capabilities. These hidden variables create patterns that agents must infer rather than directly query.
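To illustrate what “infer rather than directly query” means, here is a toy simulation loosely inspired by the SKILL variable. The skill values, the assumption that skill shortens handle time, and the noise level are all our own inventions: the point is that skill never appears as a field, so it can only be estimated from its effect on observable data.

```python
import random
from statistics import mean

random.seed(0)

# Hypothetical hidden SKILL per service agent: never stored as a queryable field.
hidden_skill = {"agent_a": 0.9, "agent_b": 0.5, "agent_c": 0.2}


def simulate_case(agent):
    # Assumed effect: higher skill -> shorter handle time, plus per-case noise.
    base_hours = 48 * (1 - hidden_skill[agent]) + 4
    return {"agent": agent, "hours": max(1.0, random.gauss(base_hours, 6))}


# Only this observable table would be visible to an agent under evaluation.
observed = [simulate_case(a) for a in hidden_skill for _ in range(200)]


def infer_skill_ranking(rows):
    # Skill can't be queried, so rank by its observable effect: mean handle time.
    by_agent = {}
    for row in rows:
        by_agent.setdefault(row["agent"], []).append(row["hours"])
    return sorted(by_agent, key=lambda a: mean(by_agent[a]))


print(infer_skill_ranking(observed))  # ['agent_a', 'agent_b', 'agent_c']
```

Any single case is too noisy to reveal the pattern; only an aggregate over many records does, which is why these questions resist one-shot lookups.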

Effective agents need operational understanding of business processes, grasping that a case isn’t just a database record but the manifestation of a customer issue with expected handling procedures, SLAs, and resolution paths.

Multi-step reasoning across object boundaries proves particularly difficult.

Finding regions where cases are closed fastest requires:

-

Identifying relevant cases within a time period

-

Extracting region information from associated accounts

-

Calculating closure times for each case

-

Aggregating by region to determine averages

-

Comparing across regions to identify top performers
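The five steps above can be sketched over in-memory records as follows. The data and the assumption that region is stored on the account rather than the case are hypothetical; a real agent would have to discover where region lives in the actual schema.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical toy data: cases link to accounts, and the region lives on the account.
accounts = {"A1": {"region": "West"}, "A2": {"region": "East"}, "A3": {"region": "West"}}
cases = [
    {"account": "A1", "opened": datetime(2024, 4, 1, 9), "closed": datetime(2024, 4, 1, 17)},
    {"account": "A2", "opened": datetime(2024, 4, 2, 9), "closed": datetime(2024, 4, 4, 9)},
    {"account": "A3", "opened": datetime(2024, 5, 6, 8), "closed": datetime(2024, 5, 6, 20)},
    {"account": "A2", "opened": datetime(2024, 5, 7, 10), "closed": None},  # still open
]


def fastest_region(cases, accounts, start, end):
    hours_by_region = defaultdict(list)
    for case in cases:
        # Step 1: keep closed cases opened within [start, end).
        if case["closed"] is None or not (start <= case["opened"] < end):
            continue
        # Step 2: hop to the linked account to read the region.
        region = accounts[case["account"]]["region"]
        # Step 3: closure time for this case, in hours.
        hours = (case["closed"] - case["opened"]).total_seconds() / 3600
        hours_by_region[region].append(hours)
    if not hours_by_region:
        return None  # no qualifying cases: say so instead of inventing a region
    # Step 4: average closure time per region.
    averages = {r: sum(h) / len(h) for r, h in hours_by_region.items()}
    # Step 5: the region with the lowest average closes cases fastest.
    return min(averages, key=averages.get), averages


print(fastest_region(cases, accounts, datetime(2024, 4, 1), datetime(2024, 7, 1)))
# ('West', {'West': 10.0, 'East': 48.0})
```

Small decisions matter here: whether still-open cases are excluded, and whether the period filter applies to the open or close date, both change the answer.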

This sequence demands both technical understanding of how to query each data point and business knowledge of how objects relate conceptually. Agents must understand query languages like SOQL/SOSL and specific schema implementations deeply enough to construct valid queries for complex information retrieval.
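As an illustration of the query side, the sketch below builds a SOQL string that fetches closed cases together with a field from the related account via a child-to-parent relationship. It uses standard Salesforce `Case` and `Account` fields; treating `Account.BillingState` as “region” is our assumption, and CRMArena’s schema may store region elsewhere. The string is only built, not executed, and closure-time arithmetic and per-region averaging are left to client code here.

```python
from datetime import datetime, timezone


def soql_datetime(dt: datetime) -> str:
    # SOQL dateTime literals are unquoted ISO 8601 values, e.g. 2024-04-01T00:00:00Z.
    if dt.tzinfo is None:
        raise ValueError("pass a timezone-aware datetime")
    return dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


def closed_cases_query(start: datetime, end: datetime) -> str:
    # Account.BillingState is a child-to-parent relationship field: it reads the
    # related account's value in the same query, avoiding a second round trip.
    return (
        "SELECT Id, CreatedDate, ClosedDate, Account.BillingState "
        "FROM Case "
        "WHERE IsClosed = true "
        f"AND CreatedDate >= {soql_datetime(start)} "
        f"AND CreatedDate < {soql_datetime(end)}"
    )


q2 = closed_cases_query(
    datetime(2024, 4, 1, tzinfo=timezone.utc),
    datetime(2024, 7, 1, tzinfo=timezone.utc),
)
print(q2)
```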

Agents must also recognize when clarification is needed versus when business context provides implicit parameters. Enterprise requests often contain implicit assumptions, underspecified requirements, and domain-specific terminology.

Even non-answerable queries, where the requested information didn’t exist, posed a challenge in the study.

In real business contexts, determining that an answer doesn’t exist is often as important as finding an answer when it does. This requires business judgment that goes beyond simple data retrieval.
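One way to keep those judgments explicit is to make “needs clarification” and “no data” first-class outcomes instead of letting them collapse into a guessed answer. The handler below is a hypothetical sketch: the `Answer` type, the `issue_counts` lookup, and the use of a `context_year` as the implicit parameter are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Answer:
    status: str  # "answered", "no_data", or "needs_clarification"
    value: Optional[str] = None
    note: str = ""


def answer_issue_question(product, quarter, issue_counts, known_products,
                          year=None, context_year=None):
    """Hypothetical handler for "most frequent issue with <product> in <quarter>".

    issue_counts maps (product, year, quarter) -> {issue: case count}.
    """
    # Prefer an explicit year; otherwise fall back to one implied by business context.
    year = year if year is not None else context_year
    if year is None:
        return Answer("needs_clarification", note=f"Which year's {quarter} do you mean?")
    if product not in known_products:
        return Answer("no_data", note=f"No product named {product!r} exists.")
    counts = issue_counts.get((product, year, quarter), {})
    if not counts:
        return Answer("no_data", note=f"No cases for {product} in {quarter} {year}.")
    return Answer("answered", value=max(counts, key=counts.get))


counts = {("Flex Yoga Mat", 2024, "Q2"): {"Slippery surface": 2, "Tearing": 1}}
known = {"Flex Yoga Mat"}
print(answer_issue_question("Flex Yoga Mat", "Q2", counts, known).status)
# needs_clarification
print(answer_issue_question("Flex Yoga Mat", "Q2", counts, known, context_year=2024).value)
# Slippery surface
print(answer_issue_question("Flex Yoga Mat", "Q3", counts, known, year=2024).status)
# no_data
```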

Parting thoughts

The CRMArena study shows that while the idea of chaining API calls in a loop captures the basic concept of agent functionality, it understates the complexity required for real business environments.

To be truly useful in production, enterprise AI agents must go beyond raw model performance. They must:

- Handle complex data schemas with foreign keys and interdependent records
- Operate across multiple platforms while maintaining secure access controls
- Guarantee consistency and reliability, because businesses cannot afford unpredictable AI performance
- Meet strict compliance and security requirements (GDPR, HIPAA, SOC 2, etc.)

And they must do all of this while delivering measurable business ROI at a sustainable cost.

Only by acknowledging and addressing the complexity of business processes and data relationships can we design systems that deliver genuine value in enterprise settings.

That is what we do at realfast: build agents that drive real business outcomes.